Source code editor What Is Ajax

Source code editor What Is Ajax

↑

Source code editor What Is Ajax

Source code editor What Is Ajax

↑

This section is a “How-To” that describes the basics for how to plan, install, configure, and run a MySQL Cluster. Whereas the examples in Section 15.3, “MySQL Cluster Configuration” provide more in-depth information on a variety of clustering options and configuration, the result of following the guidelines and procedures outlined here should be a usable MySQL Cluster which meets the minimum requirements for availability and safeguarding of data.

This section covers hardware and software requirements; networking issues; installation of MySQL Cluster; configuration issues; starting, stopping, and restarting the cluster; loading of a sample database; and performing queries.

Basic Assumptions

This How-To makes the following assumptions:

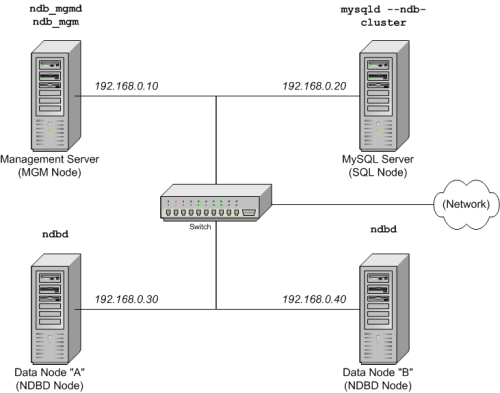

The cluster setup has four nodes, each on a separate host, and each with a fixed network address on a typical Ethernet as shown here:

| Node | IP Address |

| Management (MGM) node | 192.168.0.10 |

| MySQL server (SQL) node | 192.168.0.20 |

| Data (NDBD) node "A" | 192.168.0.30 |

| Data (NDBD) node "B" | 192.168.0.40 |

This may be made clearer in the following diagram:

In the interest of simplicity (and reliability), this How-To uses only numeric IP addresses. However, if DNS resolution is available on your network, it is possible to use hostnames in lieu of IP addresses in configuring Cluster. Alternatively, you can use the /etc/hosts file or your operating system's equivalent for providing a means to do host lookup if such is available.

A common problem when trying to use hostnames for Cluster nodes arises because of the way in which some operating systems (including some Linux distributions) set up the system's own hostname in the /etc/hosts during installation. Consider two machines with the hostnames ndb1 and ndb2, both in the cluster network domain. Red Hat Linux (including some derivatives such as CentOS and Fedora) places the following entries in these machines' /etc/hosts files:

# ndb1 /etc/hosts:

127.0.0.1 ndb1.cluster ndb1 localhost.localdomain localhost# ndb2 /etc/hosts:

127.0.0.1 ndb2.cluster ndb2 localhost.localdomain localhost SuSE Linux (including OpenSuSE) places these entries in the machines' /etc/hosts files:

# ndb1 /etc/hosts:

127.0.0.1 localhost

127.0.0.2 ndb1.cluster ndb1# ndb2 /etc/hosts:

127.0.0.1 localhost

127.0.0.2 ndb2.cluster ndb2 In both instances, ndb1 routes ndb1.cluster to a loopback IP address, but gets a public IP address from DNS for ndb2.cluster, while ndb2 routes ndb2.cluster to a loopback address and obtains a public address for ndb1.cluster. The result is that each data node connects to the management server, but cannot tell when any other data nodes have connected, and so the data nodes appear to hang while starting.

You should also be aware that you cannot mix localhost and other hostnames or IP addresses in config.ini. For these reasons, the solution in such cases (other than to use IP addresses for all config.ini HostName entries) is to remove the fully qualified hostnames from /etc/hosts and use these in config.ini for all cluster hosts.

Each host in our scenario is an Intel-based desktop PC running a common, generic Linux distribution installed to disk in a standard configuration, and running no unnecessary services. The core OS with standard TCP/IP networking capabilities should be sufficient. Also for the sake of simplicity, we also assume that the filesystems on all hosts are set up identically. In the event that they are not, you will need to adapt these instructions accordingly.

Standard 100 Mbps or 1 gigabit Ethernet cards are installed on each machine, along with the proper drivers for the cards, and that all four hosts are connected via a standard-issue Ethernet networking appliance such as a switch. (All machines should use network cards with the same throughout. That is, all four machines in the cluster should have 100 Mbps cards or all four machines should have 1 Gbps cards.) MySQL Cluster will work in a 100 Mbps network; however, gigabit Ethernet will provide better performance.

Note that MySQL Cluster is not intended for use in a network for which throughput is less than 100 Mbps. For this reason (among others), attempting to run a MySQL Cluster over a public network such as the Internet is not likely to be successful, and is not recommended.

For our sample data, we will use the world database which is available for download from the MySQL AB Web site. As this database takes up a relatively small amount of space, we assume that each machine has 256MB RAM, which should be sufficient for running the operating system, host NDB process, and (for the data nodes) for storing the database.

Although we refer to a Linux operating system in this How-To, the instructions and procedures that we provide here should be easily adaptable to other supported operating systems. We also assume that you already know how to perform a minimal installation and configuration of the operating system with networking capability, or that you are able to obtain assistance in this elsewhere if needed.

We discuss MySQL Cluster hardware, software, and networking requirements in somewhat greater detail in the next section. (See Section 15.2.1, “Hardware, Software, and Networking”.)

One of the strengths of MySQL Cluster is that it can be run on commodity hardware and has no unusual requirements in this regard, other than for large amounts of RAM, due to the fact that all live data storage is done in memory. (Note that this is not the case with Disk Data tables, which are implemented in MySQL 5.1; however, we do not intend to backport this feature to MySQL 5.0.) Naturally, multiple and faster CPUs will enhance performance. Memory requirements for other Cluster processes are relatively small.

The software requirements for Cluster are also modest. Host operating systems do not require any unusual modules, services, applications, or configuration to support MySQL Cluster. For supported operating systems, a standard installation should be sufficient. The MySQL software requirements are simple: all that is needed is a production release of MySQL 5.0 to have Cluster support. It is not necessary to compile MySQL yourself merely to be able to use Cluster. In this How-To, we assume that you are using the server binary appropriate to your operating system, available via the MySQL software downloads page at http://dev.mysql.com/downloads/.

For inter-node communication, Cluster supports TCP/IP networking in any standard topology, and the minimum expected for each host is a standard 100 Mbps Ethernet card, plus a switch, hub, or router to provide network connectivity for the cluster as a whole. We strongly recommend that a MySQL Cluster be run on its own subnet which is not shared with non-Cluster machines for the following reasons:

Security: Communications between Cluster nodes are not encrypted or shielded in any way. The only means of protecting transmissions within a MySQL Cluster is to run your Cluster on a protected network. If you intend to use MySQL Cluster for Web applications, the cluster should definitely reside behind your firewall and not in your network's De-Militarized Zone (DMZ) or elsewhere.

Efficiency: Setting up a MySQL Cluster on a private or protected network allows the cluster to make exclusive use of bandwidth between cluster hosts. Using a separate switch for your MySQL Cluster not only helps protect against unauthorized access to Cluster data, it also ensures that Cluster nodes are shielded from interference caused by transmissions between other computers on the network. For enhanced reliability, you can use dual switches and dual cards to remove the network as a single point of failure; many device drivers support failover for such communication links.

It is also possible to use the high-speed Scalable Coherent Interface (SCI) with MySQL Cluster, but this is not a requirement. See Section 15.9, “Using High-Speed Interconnects with MySQL Cluster”, for more about this protocol and its use with MySQL Cluster.

Each MySQL Cluster host computer running data or SQL nodes must have installed on it a MySQL server binary. For management nodes, it is not necessary to install the MySQL server binary, but you do have to install the MGM server daemon and client binaries (ndb_mgmd and ndb_mgm, respectively). This section covers the steps necessary to install the correct binaries for each type of Cluster node.

MySQL AB provides precompiled binaries that support Cluster, and there is generally no need to compile these yourself. Therefore, the first step in the installation process for each cluster host is to download the file mysql-5.0.46-pc-linux-gnu-i686.tar.gz from the MySQL downloads area. We assume that you have placed it in each machine's /var/tmp directory. (If you do require a custom binary, see Section 2.4.14.3, “Installing from the Development Source Tree”.)

RPMs are also available for both 32-bit and 64-bit Linux platforms. For a MySQL Cluster, three (possibly four) RPMs are required:

The Server RPM (for example, MySQL-server-5.0.46-0.glibc23.i386.rpm), which supplies the core files needed to run a MySQL Server.

The Server/Max RPM (for example, MySQL-Max-5.0.46-0.glibc23.i386.rpm), which provides a MySQL Server binary with clustering support. In MySQL 5.0, this is needed only through 5.0.37. After that, the regular server RPM provides the server binary with clustering support.

The NDB Cluster - Storage engine RPM (for example, MySQL-ndb-storage-5.0.46-0.glibc23.i386.rpm), which supplies the MySQL Cluster data node binary (ndbd).

The NDB Cluster - Storage engine management RPM (for example, MySQL-ndb-management-5.0.46-0.glibc23.i386.rpm), which provides the MySQL Cluster management server binary (ndb_mgmd).

In addition, you should also obtain the NDB Cluster - Storage engine basic tools RPM (for example, MySQL-ndb-tools-5.0.46-0.glibc23.i386.rpm), which supplies several useful applications for working with a MySQL Cluster. The most important of the these is the MySQL Cluster management client (ndb_mgm). The NDB Cluster - Storage engine extra tools RPM (for example, MySQL-ndb-extra-5.0.46-0.glibc23.i386.rpm) contains some additional testing and monitoring programs, but is not required to install a MySQL Cluster. (For more information about these additional programs, see Section 15.8, “Cluster Utility Programs”.)

The MySQL version number in the RPM filenames (shown here as 5.0.46) can vary according to the version which you are actually using. It is very important that all of the Cluster RPMs to be installed have the same MySQL version number. The glibc version number (if present — shown here as glibc23), and architecture designation (shown here as i386) should be appropriate to the machine on which the RPM is to be installed.

See Section 2.4.9, “Installing MySQL from RPM Packages on Linux”, for general information about installing MySQL using RPMs supplied by MySQL AB.

After installing from RPM, you still need to configure the cluster as discussed in Section 15.2.3, “Multi-Computer Configuration”.

Note: After completing the installation, do not yet start any of the binaries. We show you how to do so following the configuration of all nodes.

Data and SQL Node Installation — .tar.gz Binary

On each of the machines designated to host data or SQL nodes, perform the following steps as the system root user:

Check your /etc/passwd and /etc/group files (or use whatever tools are provided by your operating system for managing users and groups) to see whether there is already a mysql group and mysql user on the system. Some OS distributions create these as part of the operating system installation process. If they are not already present, create a new mysql user group, and then add a mysql user to this group:

shell>groupadd mysqlshell>useradd -g mysql mysql

The syntax for useradd and groupadd may differ slightly on different versions of Unix, or they may have different names such as adduser and addgroup.

Change location to the directory containing the downloaded file, unpack the archive, and create a symlink to the mysql directory. Note that the actual file and directory names will vary according to the MySQL version number.

shell>cd /var/tmpshell>tar -C /usr/local -xzvf mysql-5.0.46-pc-linux-gnu-i686.tar.gzshell>ln -s /usr/local/mysql-5.0.46-pc-linux-gnu-i686 /usr/local/mysql

Change location to the mysql directory and run the supplied script for creating the system databases:

shell>cd mysqlshell>scripts/mysql_install_db --user=mysql

Set the necessary permissions for the MySQL server and data directories:

shell>chown -R root .shell>chown -R mysql datashell>chgrp -R mysql .

Note that the data directory on each machine hosting a data node is /usr/local/mysql/data. We will use this piece of information when we configure the management node. (See Section 15.2.3, “Multi-Computer Configuration”.)

Copy the MySQL startup script to the appropriate directory, make it executable, and set it to start when the operating system is booted up:

shell>cp support-files/mysql.server /etc/rc.d/init.d/shell>chmod +x /etc/rc.d/init.d/mysql.servershell>chkconfig --add mysql.server

(The startup scripts directory may vary depending on your operating system and version — for example, in some Linux distributions, it is /etc/init.d.)

Here we use Red Hat's chkconfig for creating links to the startup scripts; use whatever means is appropriate for this purpose on your operating system and distribution, such as update-rc.d on Debian.

Remember that the preceding steps must be performed separately on each machine where a data node or SQL node is to reside.

SQL Node Installation — RPM Files

On each machine to be used for hosting a cluster SQL node, install the MySQL RPM by executing the following command as the system root user, replacing the name shown for the RPM as necessary to match the name of the RPM downloaded from the MySQL AB web site:

shell> rpm -Uhv MySQL-server-5.0.46-0.glibc23.i386.rpm This installs the MySQL server binary (mysqld) in the /usr/sbin directory, as well as all needed MySQL Server support files. It also installs the mysql.server and mysqld_safe startup scripts in /usr/share/mysql and /usr/bin, respectively. The RPM installer should take care of general configuration issues (such as creating the mysql user and group, if needed) automatically.

Data Node Installation — RPM Files

On a computer that is to host a cluster data node it is necessary to install only the NDB Cluster - Storage engine RPM. To do so, copy this RPM to the data node host, and run the following command as the system root user, replacing the name shown for the RPM as necessary to match that of the RPM downloaded from the MySQL AB web site:

shell> rpm -Uhv MySQL-ndb-storage-5.0.46-0.glibc23.i386.rpm The previous command installs the MySQL Cluster data node binary (ndbd) in the /usr/sbin directory.

Management Node Installation — .tar.gz Binary

Installation for the management (MGM) node does not require installation of the mysqld binary. Only the binaries for the MGM server and client are required, which can be found in the downloaded archive. Again, we assume that you have placed this file in /var/tmp.

As system root (that is, after using sudo, su root, or your system's equivalent for temporarily assuming the system administrator account's privileges), perform the following steps to install ndb_mgmd and ndb_mgm on the Cluster management node host:

Change location to the /var/tmp directory, and extract the ndb_mgm and ndb_mgmd from the archive into a suitable directory such as /usr/local/bin:

shell>cd /var/tmpshell>tar -zxvf mysql-5.0.46-pc-linux-gnu-i686.tar.gzshell>cd mysql-5.0.46-pc-linux-gnu-i686shell>cp /bin/ndb_mgm* /usr/local/bin

(You can safely delete the directory created by unpacking the downloaded archive, and the files it contains, from /var/tmp once ndb_mgm and ndb_mgmd have been copied to the executables directory.)

Change location to the directory into which you copied the files, and then make both of them executable:

shell>cd /usr/local/binshell>chmod +x ndb_mgm*

Management Node Installation — RPM File

To install the MySQL Cluster management server, it is necessary only to use the NDB Cluster - Storage engine management RPM. Copy this RPM to the computer intended to host the management node, and then install it by running the following command as the system root user (replace the name shown for the RPM as necessary to match that of the Storage engine management RPM downloaded from the MySQL AB web site):

shell> rpm -Uhv MySQL-ndb-management-5.0.46-0.glibc23.i386.rpm This installs the management server binary (ndb_mgmd) to the /usr/sbin directory.

You should also install the NDB management client, which is supplied by the Storage engine basic tools RPM. Copy this RPM to the same computer as the management node, and then install it by running the following command as the system root user (again, replace the name shown for the RPM as necessary to match that of the Storage engine basic tools RPM downloaded from the MySQL AB web site):

shell> rpm -Uhv MySQL-ndb-tools-5.0.46-0.glibc23.i386.rpm The Storage engine basic tools RPM installs the MySQL Cluster management client (ndb_mgm) to the /usr/bin directory.

In Section 15.2.3, “Multi-Computer Configuration”, we create configuration files for all of the nodes in our example Cluster.

For our four-node, four-host MySQL Cluster, it is necessary to write four configuration files, one per node host.

Each data node or SQL node requires a my.cnf file that provides two pieces of information: a connectstring that tells the node where to find the MGM node, and a line telling the MySQL server on this host (the machine hosting the data node) to run in NDB mode.

For more information on connectstrings, see Section 15.3.4.2, “The Cluster Connectstring”.

The management node needs a config.ini file telling it how many replicas to maintain, how much memory to allocate for data and indexes on each data node, where to find the data nodes, where to save data to disk on each data node, and where to find any SQL nodes.

Configuring the Storage and SQL Nodes

The my.cnf file needed for the data nodes is fairly simple. The configuration file should be located in the /etc directory and can be edited using any text editor. (Create the file if it does not exist.) For example:

shell> vi /etc/my.cnfWe show vi being used here to create the file, but any text editor should work just as well.

For each data node and SQL node in our example setup, my.cnf should look like this:

# Options for mysqld process: [MYSQLD] ndbcluster # run NDB storage engine ndb-connectstring=192.168.0.10 # location of management server # Options for ndbd process: [MYSQL_CLUSTER] ndb-connectstring=192.168.0.10 # location of management server

After entering the preceding information, save this file and exit the text editor. Do this for the machines hosting data node “A”, data node “B”, and the SQL node.

Important: Once you have started a mysqld process with the ndbcluster and ndb-connectstring parameters in the [MYSQLD] in the my.cnf file as shown previously, you cannot execute any CREATE TABLE or ALTER TABLE statements without having actually started the cluster. Otherwise, these statements will fail with an error. This is by design.

Configuring the Management Node

The first step in configuring the MGM node is to create the directory in which the configuration file can be found and then to create the file itself. For example (running as root):

shell>mkdir /var/lib/mysql-clustershell>cd /var/lib/mysql-clustershell>vi config.ini

For our representative setup, the config.ini file should read as follows:

# Options affecting ndbd processes on all data nodes:

[NDBD DEFAULT]

NoOfReplicas=2 # Number of replicas

DataMemory=80M # How much memory to allocate for data storage

IndexMemory=18M # How much memory to allocate for index storage

# For DataMemory and IndexMemory, we have used the

# default values. Since the "world" database takes up

# only about 500KB, this should be more than enough for

# this example Cluster setup.

# TCP/IP options:

[TCP DEFAULT]

portnumber=2202 # This the default; however, you can use any

# port that is free for all the hosts in cluster

# Note: It is recommended beginning with MySQL 5.0 that

# you do not specify the portnumber at all and simply allow

# the default value to be used instead

# Management process options:

[NDB_MGMD]

hostname=192.168.0.10 # Hostname or IP address of MGM node

datadir=/var/lib/mysql-cluster # Directory for MGM node log files

# Options for data node "A":

[NDBD]

# (one [NDBD] section per data node)

hostname=192.168.0.30 # Hostname or IP address

datadir=/usr/local/mysql/data # Directory for this data node's data files

# Options for data node "B":

[NDBD]

hostname=192.168.0.40 # Hostname or IP address

datadir=/usr/local/mysql/data # Directory for this data node's data files

# SQL node options:

[MYSQLD]

hostname=192.168.0.20 # Hostname or IP address

# (additional mysqld connections can be

# specified for this node for various

# purposes such as running ndb_restore) (Note: The world database can be downloaded from http://dev.mysql.com/doc/, where it can be found listed under “Examples”.)

After all the configuration files have been created and these minimal options have been specified, you are ready to proceed with starting the cluster and verifying that all processes are running. We discuss how this is done in Section 15.2.4, “Initial Startup”.

For more detailed information about the available MySQL Cluster configuration parameters and their uses, see Section 15.3.4, “Configuration File”, and Section 15.3, “MySQL Cluster Configuration”. For configuration of MySQL Cluster as relates to making backups, see Section 15.7.4, “Configuration for Cluster Backup”.

Note: The default port for Cluster management nodes is 1186; the default port for data nodes is 2202. Beginning with MySQL 5.0.3, this restriction is lifted, and the cluster automatically allocates ports for data nodes from those that are already free.

Starting the cluster is not very difficult after it has been configured. Each cluster node process must be started separately, and on the host where it resides. The management node should be started first, followed by the data nodes, and then finally by any SQL nodes:

On the management host, issue the following command from the system shell to start the MGM node process:

shell> ndb_mgmd -f /var/lib/mysql-cluster/config.ini Note that ndb_mgmd must be told where to find its configuration file, using the -f or --config-file option. (See Section 15.5.3, “ndb_mgmd — The Management Server Process”, for details.)

On each of the data node hosts, run this command to start the ndbd process for the first time:

shell> ndbd --initial Note that it is very important to use the --initial parameter only when starting ndbd for the first time, or when restarting after a backup/restore operation or a configuration change. This is because the --initial option causes the node to delete any files created by earlier ndbd instances that are needed for recovery, including the recovery log files.

If you used RPM files to install MySQL on the cluster host where the SQL node is to reside, you can (and should) use the supplied startup script to start the MySQL server process on the SQL node.

If all has gone well, and the cluster has been set up correctly, the cluster should now be operational. You can test this by invoking the ndb_mgm management node client. The output should look like that shown here, although you might see some slight differences in the output depending upon the exact version of MySQL that you are using:

shell>ndb_mgm-- NDB Cluster -- Management Client -- ndb_mgm>SHOWConnected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=2 @192.168.0.30 (Version: 5.0.46, Nodegroup: 0, Master) id=3 @192.168.0.40 (Version: 5.0.46, Nodegroup: 0) [ndb_mgmd(MGM)] 1 node(s) id=1 @192.168.0.10 (Version: 5.0.46) [mysqld(SQL)] 1 node(s) id=4 (Version: 5.0.46)

Note: The SQL node is referenced here as [mysqld(API)]. This is perfectly normal, and reflects the fact that the mysqld process is acting as a cluster API node.

You should now be ready to work with databases, tables, and data in MySQL Cluster. See Section 15.2.5, “Loading Sample Data and Performing Queries”, for a brief discussion.

Working with data in MySQL Cluster is not much different from doing so in MySQL without Cluster. There are two points to keep in mind:

For a table to be replicated in the cluster, it must use the NDB Cluster storage engine. To specify this, use the ENGINE=NDB or ENGINE=NDBCLUSTER table option. You can add this option when creating the table:

CREATE TABLE tbl_name ( ... ) ENGINE=NDBCLUSTER; Alternatively, for an existing table that uses a different storage engine, use ALTER TABLE to change the table to use NDB Cluster:

ALTER TABLE tbl_name ENGINE=NDBCLUSTER; Each NDB table must have a primary key. If no primary key is defined by the user when a table is created, the NDB Cluster storage engine automatically generates a hidden one. (Note: This hidden key takes up space just as does any other table index. It is not uncommon to encounter problems due to insufficient memory for accommodating these automatically created indexes.)

If you are importing tables from an existing database using the output of mysqldump, you can open the SQL script in a text editor and add the ENGINE option to any table creation statements, or replace any existing ENGINE (or TYPE) options. Suppose that you have the world sample database on another MySQL server that does not support MySQL Cluster, and you want to export the City table:

shell> mysqldump --add-drop-table world City > city_table.sql The resulting city_table.sql file will contain this table creation statement (and the INSERT statements necessary to import the table data):

DROP TABLE IF EXISTS `City`;

CREATE TABLE `City` (

`ID` int(11) NOT NULL auto_increment,

`Name` char(35) NOT NULL default '',

`CountryCode` char(3) NOT NULL default '',

`District` char(20) NOT NULL default '',

`Population` int(11) NOT NULL default '0',

PRIMARY KEY (`ID`)

) ENGINE=MyISAM DEFAULT charset=utf-8;

INSERT INTO `City` VALUES (1,'Kabul','AFG','Kabol',1780000);

INSERT INTO `City` VALUES (2,'Qandahar','AFG','Qandahar',237500);

INSERT INTO `City` VALUES (3,'Herat','AFG','Herat',186800);

(remaining INSERT statements omitted) You will need to make sure that MySQL uses the NDB storage engine for this table. There are two ways that this can be accomplished. One of these is to modify the table definition before importing it into the Cluster database. Using the City table as an example, modify the ENGINE option of the definition as follows:

DROP TABLE IF EXISTS `City`;

CREATE TABLE `City` (

`ID` int(11) NOT NULL auto_increment,

`Name` char(35) NOT NULL default '',

`CountryCode` char(3) NOT NULL default '',

`District` char(20) NOT NULL default '',

`Population` int(11) NOT NULL default '0',

PRIMARY KEY (`ID`)

) ENGINE=NDBCLUSTER DEFAULT charset=utf-8;

INSERT INTO `City` VALUES (1,'Kabul','AFG','Kabol',1780000);

INSERT INTO `City` VALUES (2,'Qandahar','AFG','Qandahar',237500);

INSERT INTO `City` VALUES (3,'Herat','AFG','Herat',186800);

(remaining INSERT statements omitted) This must be done for the definition of each table that is to be part of the clustered database. The easiest way to accomplish this is to do a search-and-replace on the file that contains the definitions and replace all instances of TYPE= or engine_nameENGINE= with engine_nameENGINE=NDBCLUSTER. If you do not want to modify the file, you can use the unmodified file to create the tables, and then use ALTER TABLE to change their storage engine. The particulars are given later in this section.

Assuming that you have already created a database named world on the SQL node of the cluster, you can then use the mysql command-line client to read city_table.sql, and create and populate the corresponding table in the usual manner:

shell> mysql world < city_table.sql It is very important to keep in mind that the preceding command must be executed on the host where the SQL node is running (in this case, on the machine with the IP address 192.168.0.20).

To create a copy of the entire world database on the SQL node, use mysqldump on the non-cluster server to export the database to a file named world.sql; for example, in the /tmp directory. Then modify the table definitions as just described and import the file into the SQL node of the cluster like this:

shell> mysql world < /tmp/world.sqlIf you save the file to a different location, adjust the preceding instructions accordingly.

It is important to note that NDB Cluster in MySQL 5.0 does not support autodiscovery of databases. (See Section 15.10, “Known Limitations of MySQL Cluster”.) This means that, once the world database and its tables have been created on one data node, you need to issue the CREATE SCHEMA world statement (beginning with MySQL 5.0.2, you may use CREATE SCHEMA world instead), followed by FLUSH TABLES on each SQL node in the cluster. This causes the node to recognize the database and read its table definitions.

Running SELECT queries on the SQL node is no different from running them on any other instance of a MySQL server. To run queries from the command line, you first need to log in to the MySQL Monitor in the usual way (specify the root password at the Enter password: prompt):

shell> mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1 to server version: 5.0.46

Type 'help;' or '\h' for help. Type '\c' to clear the buffer.

mysql> We simply use the MySQL server's root account and assume that you have followed the standard security precautions for installing a MySQL server, including setting a strong root password. For more information, see Section 2.4.15.3, “Securing the Initial MySQL Accounts”.

It is worth taking into account that Cluster nodes do not make use of the MySQL privilege system when accessing one another. Setting or changing MySQL user accounts (including the root account) effects only applications that access the SQL node, not interaction between nodes.

If you did not modify the ENGINE clauses in the table definitions prior to importing the SQL script, you should run the following statements at this point:

mysql>USE world;mysql>ALTER TABLE City ENGINE=NDBCLUSTER;mysql>ALTER TABLE Country ENGINE=NDBCLUSTER;mysql>ALTER TABLE CountryLanguage ENGINE=NDBCLUSTER;

Selecting a database and running a SELECT query against a table in that database is also accomplished in the usual manner, as is exiting the MySQL Monitor:

mysql>USE world;mysql>SELECT Name, Population FROM City ORDER BY Population DESC LIMIT 5;+-----------+------------+ | Name | Population | +-----------+------------+ | Bombay | 10500000 | | Seoul | 9981619 | | Sгo Paulo | 9968485 | | Shanghai | 9696300 | | Jakarta | 9604900 | +-----------+------------+ 5 rows in set (0.34 sec) mysql>\qBye shell>

Applications that use MySQL can employ standard APIs to access NDB tables. It is important to remember that your application must access the SQL node, and not the MGM or data nodes. This brief example shows how we might execute the SELECT statement just shown by using PHP 5's mysqli extension running on a Web server elsewhere on the network:

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN"

"http://www.w3.org/TR/html4/loose.dtd">

<html>

<head>

<meta http-equiv="Content-Type"

content="text/html; charset=utf-8">

<title>SIMPLE mysqli SELECT</title>

</head>

<body>

<?php

# connect to SQL node:

$link = new mysqli('192.168.0.20', 'root', 'root_password', 'world');

# parameters for mysqli constructor are:

# host, user, password, database

if( mysqli_connect_errno() )

die("Connect failed: " . mysqli_connect_error());

$query = "SELECT Name, Population

FROM City

ORDER BY Population DESC

LIMIT 5";

# if no errors...

if( $result = $link->query($query) )

{

?>

<table border="1" width="40%" cellpadding="4" cellspacing ="1">

<tbody>

<tr>

<th width="10%">City</th>

<th>Population</th>

</tr>

<?

# then display the results...

while($row = $result->fetch_object())

printf(<tr>\n <td align=\"center\">%s</td><td>%d</td>\n</tr>\n",

$row->Name, $row->Population);

?>

</tbody

</table>

<?

# ...and verify the number of rows that were retrieved

printf("<p>Affected rows: %d</p>\n", $link->affected_rows);

}

else

# otherwise, tell us what went wrong

echo mysqli_error();

# free the result set and the mysqli connection object

$result->close();

$link->close();

?>

</body>

</html>We assume that the process running on the Web server can reach the IP address of the SQL node.

In a similar fashion, you can use the MySQL C API, Perl-DBI, Python-mysql, or MySQL AB's own Connectors to perform the tasks of data definition and manipulation just as you would normally with MySQL.

To shut down the cluster, enter the following command in a shell on the machine hosting the MGM node:

shell> ndb_mgm -e shutdown The -e option here is used to pass a command to the ndb_mgm client from the shell. See Section 4.3.1, “Using Options on the Command Line”. The command causes the ndb_mgm, ndb_mgmd, and any ndbd processes to terminate gracefully. Any SQL nodes can be terminated using mysqladmin shutdown and other means.

To restart the cluster, run these commands:

On the management host (192.168.0.10 in our example setup):

shell> ndb_mgmd -f /var/lib/mysql-cluster/config.ini On each of the data node hosts (192.168.0.30 and 192.168.0.40):

shell> ndbd Remember not to invoke this command with the --initial option when restarting an NDBD node normally.

On the SQL host (192.168.0.20):

shell> mysqld &For information on making Cluster backups, see Section 15.7.2, “Using The Management Client to Create a Backup”.

To restore the cluster from backup requires the use of the ndb_restore command. This is covered in Section 15.7.3, “ndb_restore — Restore a Cluster Backup”.

More information on configuring MySQL Cluster can be found in Section 15.3, “MySQL Cluster Configuration”.