Free JavaScript Editor

Ajax Editor

Free JavaScript Editor

Ajax Editor

19.7. ConvolutionCONVOLUTION is a common image processing operation that filters an image by computing the sum of products between the source image and a smaller image called the CONVOLUTION KERNEL or the CONVOLUTION FILTER. Depending on the choice of values in the convolution kernel, a convolution operation can perform blurring, sharpening, noise reduction, edge detection, and other useful imaging operations. Mathematically, the discrete 2D convolution operation is defined as

In this equation, the function F represents the base image, and G represents the convolution kernel. The double summation is based on the width and height of the convolution kernel. We compute the value for a particular pixel in the output image by aligning the center of the convolution kernel with the pixel at the same position in the base image, multiplying the values of the base image pixels covered by the convolution kernel by the values in the corresponding locations in the convolution kernel, and then summing the results. The imaging subset of OpenGL 2.0 contains support for a fixed functionality convolution operation, but implementations of this functionality always have limitations in the size of the kernel supported (typical maximum size is 3 x 3) and in the precision of the intermediate calculations. The flexibility of the OpenGL Shading Language enables convolution operations with arbitrary-sized kernels and full floating-point precision. Furthermore, the convolution operation can be written in an OpenGL shader such that the minimum number of multiplication operations is actually performed (i.e., kernel values equal to 0 do not need to be stored or used in the convolution computation). But there are a couple of hurdles to overcome. First, this operation seems naturally suited to implementation as a fragment shader, but the fragment shader is not allowed to access the values of any neighboring fragments. How can we perform a neighborhood operation such as convolution without access to the "neighborhood"? The answer to this dilemma is that we store the base image in texture memory and access it as a texture. We can draw a screen-aligned rectangle that's the same size on the screen as the base image and enable texturing to render the image perfectly. We can introduce a fragment shader into this process, and instead of sampling the image just once during the texturing process, we can access each of the values under the convolution kernel and compute the convolution result at every pixel. A second hurdle is that, although the OpenGL Shading Language supports loops, even nested loops, it does not currently support two-dimensional arrays. We can easily overcome this by "unrolling" the convolution kernel and storing it as a one-dimensional array. Each location in this array stores an x- and y-offset from the center of the convolution kernel and the value of the convolution kernel at that position. In the fragment shader, we process this array in a single loop, adding the specified offsets to the current texture location, accessing the base image, and multiplying the retrieved pixel value by the convolution kernel value. Storing the convolution kernel this way means that we can store the values in row major order, column major order, backwards, or all mixed up. We don't even need to include convolution kernel values that are zero, because they do not contribute to the convolved image. The interesting work for performing convolution is done with fragment shaders. The vertex shader is required to perform an identity mapping of the input geometry (a screen-aligned rectangle that is the size of the base image), and it is required to pass on texture coordinates. The texture coordinate (0,0) should be assigned to the lower-left corner of the rectangle, and the texture coordinate (1,1) should be assigned to the upper-right corner of the rectangle. One additional issue with convolution operations is deciding what to do when the convolution kernel extends beyond the edges of the base image. A convenient side effect of using OpenGL texture operations to perform the convolution is that the texture-wrapping modes defined by OpenGL map nicely to the common methods of treating convolution borders. Thus,

The texture filtering modes should be set to GL_NEAREST to avoid unintended interpolation. 19.7.1. SmoothingImage smoothing operations can attenuate high frequencies in an image. A common image smoothing operation is known as NEIGHBORHOOD AVERAGING. This method uses a convolution kernel that contains a weighting of 1.0 in each location. The final sum is divided by a value equal to the number of locations in the convolution kernel. For instance, a 3 x 3 neighborhood averaging convolution filter would look like this:

The resulting sum would be divided by 9 (or multiplied by 1/9). Neighborhood averaging, as the name implies, has the effect of averaging all the pixels in a region with equal weighting. It effectively smears the value of each pixel to its neighbors, resulting in a blurry version of the original image. Because all the elements of the convolution kernel are equal to 1, we can write a simplified fragment shader to implement neighborhood averaging (see Listing 19.7). This shader can be used for neighborhood averaging for any kernel size where width * height is less than or equal to 25 (i.e., up to 5 x 5). The results of this operation are shown in Figure 19.1 (B). Figure 19.1. Results of various convolution operations

Listing 19.7. Fragment shader for the neighborhood averaging convolution operation

Image smoothing by means of convolution is often used to reduce noise. This works well in regions of solid color or intensity, but it has the side effect of blurring high frequencies (edges). A convolution filter that applies higher weights to values nearer the center can do a better job of eliminating noise while preserving edge detail. Such a filter is the Gaussian filter, which can be encoded in a convolution kernel as follows:

Listing 19.8 contains the code for a more general convolution shader. This shader can handle convolution kernels containing up to 25 entries. In this shader, each convolution kernel entry is expected to have been multiplied by the final scale factor, so there is no need to scale the final sum. Listing 19.8. Fragment shader general convolution computation

The original image in Figure 19.1 (A) has had uniform noise added to it to create the image in Figure 19.1 (C). The Gaussian smoothing filter is then applied to this image to remove noise, and the result is shown in Figure 19.1 (D). Notice in particular that the noise has been significantly reduced in areas of nearly constant intensity. As the size of the convolution kernel goes up, the number of texture reads that is required increases as the square of the kernel size. For larger kernels, this can become the limiting factor for performance. Some kernels, including the Gaussian kernel just described, are said to be separable because the convolution operation with a width x height kernel can be performed as two passes, with one-dimensional convolutions of size width x 1 and 1 x height. With this approach, there is an extra write for each pixel (the result of the first pass), but the number of texture reads is reduced from width x height to width + height. 19.7.2. Edge DetectionAnother common use for the convolution operation is edge detection. This operation is useful for detecting meaningful discontinuities in intensity level. The resulting image can aid in image comprehension or can enhance the image in other ways. One method for detecting edges involves the Laplacian operator.

We can plug the Laplacian convolution kernel into the fragment shader shown in Listing 19.8. This results in the image shown in Figure 19.2. Figure 19.2. Edge detection with the Laplacian convolution kernel (image scaled for display)

19.7.3. SharpeningA common method of image sharpening is to add the results of an edge detection filter back onto the original image. To control the degree of sharpening, a scaling factor scales the edge image as it is added. One way to sharpen an image is with the negative Laplacian operator. This convolution filter is defined as

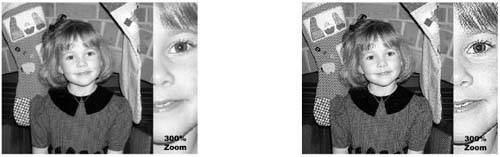

The fragment shader that implements image sharpening in this fashion is almost identical to the general convolution shader shown in the previous section (see Listing 19.9). The only difference is that the result of the convolution operation is added to the original image. Before it is added, the convolution result is scaled by a scale factor provided by the application through a uniform variable. The results of unsharp masking are shown in Figure 19.4 and Figure 19.3. Figure 19.3. Results of the unsharp masking shader. Original image is on the left, sharpened image on the right.

Figure 19.4. Results of the unsharp masking shader. (Laplacian image in center is scaled for display.) Zoomed views of the original and resulting images are shown in Figure 19.3.

Listing 19.9. Fragment shader for unsharp masking

|

Ajax Editor

JavaScript Editor

Ajax Editor

JavaScript Editor