14.3. BRDF Models

The traditional OpenGL reflectance model, and the one that we have been using for most of the previous shader examples in this book (see, for example, Section 6.2), consists of three components: ambient, diffuse, and specular. The ambient component is assumed to provide a certain level of illumination to everything in the scene and is reflected equally in all directions by everything in the scene. The diffuse and specular components are directional in nature and are due to illumination from a particular light source. The diffuse component models reflection from a surface that is scattered in all directions. The diffuse reflection is strongest where the surface normal points directly at the light source, and it drops to zero where the surface normal is pointing 90° or more away from the light source. Specular reflection models the highlights caused by reflection from surfaces that are mirrorlike or nearly so. Specular highlights are concentrated on the mirror direction.

But relatively few materials have perfectly specular (mirrorlike) or diffuse (Lambertian) reflection characteristics. To model more physically realistic surfaces, we must go beyond the simplistic lighting/reflection model that is built into OpenGL. The built-in model was developed empirically and is not physically accurate. Furthermore, it can realistically simulate the reflection from only a relatively small class of materials.

For more than two decades, computer graphics researchers have been rendering images with more realistic reflection models called bidirectional reflectance distribution functions, or BRDFs. A BRDF model for computing the reflection from a surface takes into account the input direction of incoming light and the outgoing direction of reflected light. The elevation and azimuth angles of these direction vectors are used to compute the relative amount of light reflected in the outgoing direction (the fixed functionality OpenGL model uses only the elevation angle).
A BRDF model can also render surfaces with anisotropic reflection properties (i.e., surfaces that are not rotationally invariant in their surface reflection properties). Instruments have been developed to measure the BRDF of real materials. In some cases, the measured data has been used to create a function with a few parameters that can be modified to model the reflective characteristics of a variety of materials. In other cases, the measured data has been sampled to produce texture maps that reconstruct the BRDF at runtime. A variety of measuring, sampling, and reconstruction methods have been devised to use BRDFs in computer graphics, and this is still an area of active research.

Generally speaking, the amount of light that is reflected to a particular viewing position depends on the position of the light, the position of the viewer, and the surface normal and tangent. If any of these changes, the amount of light reflected to the viewer may also change. The surface characteristics also play a role because different wavelengths of light may be reflected, transmitted, or absorbed, depending on the physical properties of the material. Shiny materials have concentrated, near-mirrorlike specular highlights. Rough materials have specular highlights that are more spread out. Metals have specular highlights that are the color of the metal rather than the color of the light source. The color of reflected light may change as the reflection approaches a grazing angle with the surface. Materials with small brush marks or grooves reflect light differently as they are rotated, and the shapes of their specular highlights also change. These are the types of effects that BRDF models are intended to reproduce accurately.

A BRDF is a function of two pairs of angles as well as the wavelength and polarization of the incoming light.
The angles are the altitude and azimuth of the incident light vector (θi, φi) and the altitude and azimuth of the reflected light vector (θr, φr). Both sets of angles are given with respect to a given tangent vector. For simplicity, some BRDF models omit polarization effects and assume that the function is the same for all wavelengths. Because the incident and reflected light vectors are measured against a fixed tangent vector in the plane of a surface, BRDF models can reproduce the reflective characteristics of anisotropic materials such as brushed or rolled metals. And because both the incident and reflected light vectors are considered, BRDF models can also reproduce the changes in specular highlight shapes or colors that occur when an object is illuminated by a light source at a grazing angle.

BRDF models can be either theoretical or empirical. Theoretical models attempt to model the physics of light and materials in order to reproduce the observed reflectance properties. In contrast, an empirical model is a function with adjustable parameters that is designed to fit measured reflectance data for a certain class of materials. The volume of measured data typically prohibits its direct use in a computer graphics environment, and this data is often imperfect or incomplete. Somehow, the measured data must be boiled down to a few useful values that can be plugged into a formula or used to create textures that can be accessed during rendering. A variety of methods for reducing the measured data have been developed.

One such model was described by Greg Ward in a 1992 SIGGRAPH paper. He and his colleagues at Lawrence Berkeley Laboratory built a device that was relatively efficient in collecting reflectance data from a variety of materials. The measurements were the basis for creating a mathematical reflectance model that provided a reasonable approximation to the measured data.
Ward's goal was to produce a simple empirical formula that was physically valid and fit the measured reflectance data for a variety of different materials. Ward measured the reflectivity of various materials to determine a few key values with physical meaning and plugged those values into the formula he developed to replicate the measured data in a computer graphics environment.

To understand Ward's model, we should first review the geometry involved, as shown in Figure 14.3. This diagram shows a point on a surface and the relevant direction vectors that are used in the reflection computation: the surface normal N, the direction to the light source L, the reflection vector R, the direction to the viewer V, the halfway vector H, the surface tangent T, and the surface binormal B.

Figure 14.3. The geometry of reflection

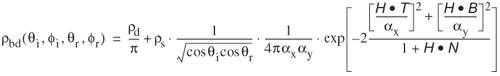

The formula developed by Ward is based on a Gaussian reflectance model. The key parameters of the formula are the diffuse reflectivity of the surface (ρd), the specular reflectivity of the surface (ρs), and the standard deviation of the surface slope (α). The final parameter is a measure of the roughness of a surface. The assumption is that a surface is made up of tiny microfacets that reflect in a specular fashion. For a mirrorlike surface, all the microfacets line up with the surface. For a rougher surface, some random orientation of these microfacets causes the specular highlight to spread out more. The fraction of facets that are oriented in the direction of H is called the facet slope distribution function, or the surface slope. Several possibilities for this function have been suggested.

With but a single value for the surface slope, the mathematical model is limited in its ability to reproduce materials exhibiting anisotropic reflection. To deal with this, Ward's model includes two α values, one for the standard deviation of the surface slope in the x direction (i.e., in the direction of the surface tangent vector T) and one for the standard deviation of the surface slope in the y direction (i.e., in the direction of the surface binormal vector B). The formula used by Ward to fit his measured reflectance data and the key parameters derived from that data is

$$\rho_{bd}(\theta_i, \phi_i, \theta_r, \phi_r) = \frac{\rho_d}{\pi} + \rho_s \cdot \frac{1}{\sqrt{\cos\theta_i \cos\theta_r}} \cdot \frac{1}{4\pi\alpha_x\alpha_y} \exp\left[-2\,\frac{\left(\frac{H \cdot T}{\alpha_x}\right)^2 + \left(\frac{H \cdot B}{\alpha_y}\right)^2}{1 + H \cdot N}\right]$$

This formula looks a bit onerous, but Ward has supplied values for ρd, ρs, αx, and αy for several materials, and all we need to do is code the formula in the OpenGL Shading Language. The result of this BRDF is plugged into the overall equation for illumination, which looks like this

$$L(\theta_r, \phi_r) = I\,\frac{\rho_d}{\pi} + L_s\,\rho_s + \sum_{i=1}^{N} L_i\,\omega_i \cos\theta_i\,\rho_{bd}(\theta_i, \phi_i, \theta_r, \phi_r)$$

This formula basically states that the reflected radiance is the sum of a general indirect radiance contribution, plus an indirect semispecular contribution, plus the radiance from each of N light sources in the scene. I is the indirect radiance, Ls is the radiance from the indirect semispecular contribution, and Li is the radiance from light source i. For the remaining terms, ωi is the solid angle in steradians of light source i, and ρbd is the BRDF defined in the previous equation.

This all translates quite easily into OpenGL Shading Language code. To get higher-quality results, we compute all the vectors in the vertex shader, interpolate them, and then perform the reflection computations in the fragment shader.

The application is expected to provide four attributes for every vertex. Two of them are standard OpenGL attributes and need not be defined by our vertex shader: gl_Vertex (position) and gl_Normal (surface normal). The other two attributes are a tangent vector and a binormal vector, which the application computes. These two attributes should be provided to OpenGL with either the glVertexAttrib function or a generic vertex array. The location to be used for these generic attributes can be bound to the appropriate attribute in our vertex shader with glBindAttribLocation. For instance, if we choose to pass the tangent values in vertex attribute location 3 and the binormal values in vertex attribute location 4, we would set up the binding with these lines of code:

    glBindAttribLocation(programObj, 3, "Tangent");
    glBindAttribLocation(programObj, 4, "Binormal");

If the variable tangent is defined to be an array of three floats and binormal is also defined as an array of three floats, we can pass in these generic vertex attributes by using the following calls:

    glVertexAttrib3fv(3, tangent);
    glVertexAttrib3fv(4, binormal);

Alternatively, we could pass these values to OpenGL by using generic vertex arrays.

Listing 14.6 contains the vertex shader. Its primary job is to compute and normalize the vectors shown in Figure 14.3, namely, the unit vectors N, L, V, H, R, T, and B. We compute the values for N, T, and B by transforming the application-supplied normal, tangent, and binormal into eye coordinates. We compute the reflection vector R by using the built-in function reflect. We determine L by normalizing the direction to the light source. Because the viewing position is defined to be at the origin in eye coordinates, we compute V by transforming the viewing position into eye coordinates and subtracting the surface position in eye coordinates. H is the normalized sum of L and V. All seven of these values are stored in varying variables that will be interpolated and made available to the fragment shader.

Listing 14.6. Vertex shader for rendering with Ward's BRDF model

It is then up to the fragment shader to implement the equations defined previously. The values that parameterize a material (ρd, ρs, αx, αy) are passed as the uniform variables P and A. We can use the values from the table published in Ward's paper or try some values of our own. The base color of the surface is also passed as a uniform variable (Ward's measurements did not include color for any of the materials). Instead of dealing with the radiance and solid angles of light sources, we just use a uniform variable to supply coefficients that manipulate these terms directly.

The vectors passed as varying variables become denormalized during interpolation, but if the polygons in the scene are all relatively small, this effect is hard to notice. For this reason, we can usually skip the step of renormalizing these values in the fragment shader.

The first three lines of code in the fragment shader (Listing 14.7) compute the expression in the exp function from Ward's BRDF. The next two lines obtain the necessary cosine values by computing the dot product of the appropriate vectors. We then use these values to compute the value for brdf, which is the same as ρbd in the equations above. The next statement puts it all together into an intensity value that attenuates the base color for the surface. The attenuated value becomes the final color for the fragment.

Listing 14.7. Fragment shader for rendering with Ward's BRDF model

Some results from this shader are shown in Color Plate 23. It certainly would be possible to extend the formula and surface parameterization values to operate on three channels instead of just one. The resulting shader could be used to simulate materials whose specular highlight changes color depending on the viewing angle.